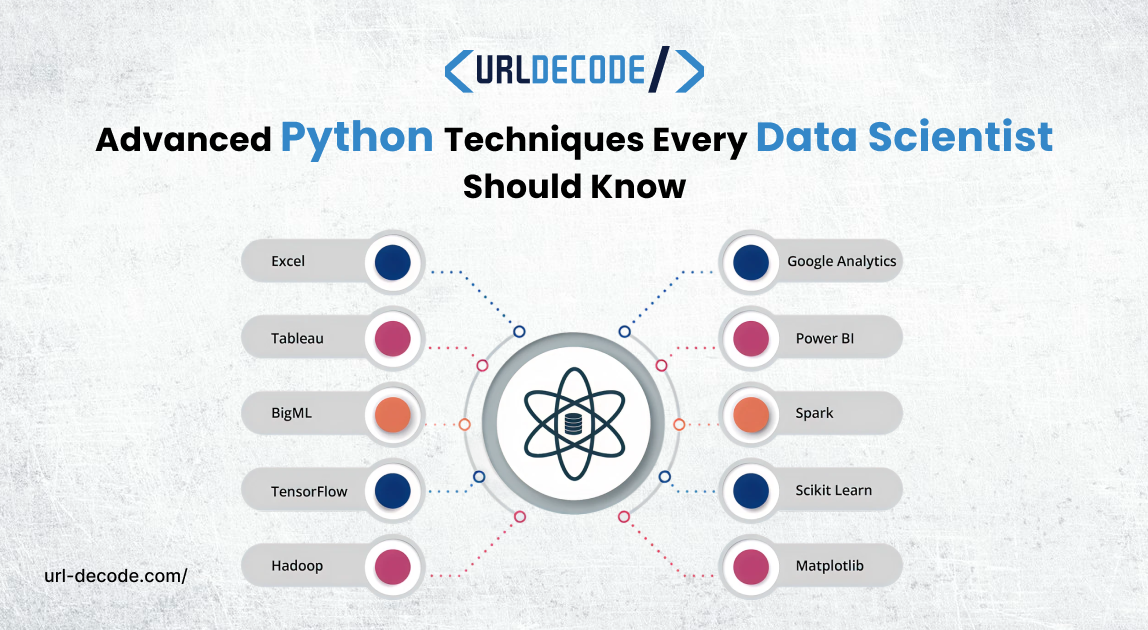

Advanced Python Techniques Every Data Scientist Should Know

If you've mastered the basics of Python and are looking for the next step into real-world data science work, it's time to expand your toolbox. Serious data scientists know that working in Python is not just about writing loops and loading CSV files (that is, learning the syntax); it's about working smarter, faster, and more efficiently by using the powerful features of the language and its libraries.

In this article, we explore advanced Python techniques that go well beyond basic tutorials: techniques you will encounter in any solid data science course, on the job, and in competitions such as Kaggle.

Why Advanced Python Matters in Data Science

As data science continues to expand, professionals face increasingly complex challenges involving big data, deep learning, and real-time analytics. Basic Python may be sufficient for most everyday data manipulation, but advanced knowledge lets data scientists work at a much higher level. Once a data scientist understands object-oriented programming, decorators, generators, and context managers, they can write scalable, efficient, and reusable code, a key skill for production-level projects.

Efficient Data Handling and Performance Optimization

Advanced Python provides techniques to maximize performance, such as multithreading, multiprocessing, and asynchronous programming, all of which matter when working with large datasets or deploying models that process data in real time. Users who understand Python internals can also get far more speed and memory efficiency out of tools such as NumPy, pandas, and Dask.

Custom Algorithm Development and Model Building

Off-the-shelf models and functions may not be enough for real-world use cases. With advanced Python skills, data scientists can build their own algorithms to meet business needs and customize machine learning pipelines for specific goals. This could involve building custom transformers in scikit-learn, writing custom loss functions in TensorFlow or PyTorch, or adapting machine learning algorithms to domain-specific problems.

Integration with Broader Tech Ecosystems

Data scientists rarely work in isolation. If you build an end-to-end machine learning project that goes beyond data engineering and exploration to deliver an end product, such as deploying an application to AWS Lambda or Google Cloud Functions, building APIs with Flask or FastAPI, or automating workflows with Airflow, advanced Python skills are essential. For example, you can build APIs that automate job submissions, resume uploads, and follow-ups at scale. In all these areas, advanced Python bridges the gap between data science models and business environments.

1. Functional Thinking: Why Less Is More

Functional thinking in programming prioritizes short, clean, well-structured, and reliable code. Functional programming discourages changing state and mutable data, and favors pure functions: functions that always produce the same output for the same input and have no side effects. Purity is desirable because it makes code predictable, which in turn makes it more straightforward, less buggy, and easier to understand and maintain.

Clarity Through Simplicity

A developer who takes a functional approach usually writes less code than with a more procedural approach. This is not minimalism for its own sake (although that has some appeal), but the result of being deliberate about how functions are written and combined. Functions such as map(), filter(), and functools.reduce() let you apply powerful transformations to a dataset without deep nesting, temporary names, or hand-written loops. The result is shorter code that is cleaner and easier to reason about.
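As a minimal sketch of this style, the snippet below chains map(), filter(), and functools.reduce() over a small list of prices. The price data, the 20% tax rate, and the helper names add_tax and is_expensive are illustrative assumptions, not part of any library.

```python
from functools import reduce  # in Python 3, reduce lives in functools

prices = [12.5, 99.0, 3.25, 47.0, 150.0]  # made-up example data

# Pure functions: output depends only on input, nothing outside is modified
def add_tax(price: float, rate: float = 0.2) -> float:
    return round(price * (1 + rate), 2)

def is_expensive(price: float) -> bool:
    return price > 50

with_tax = list(map(add_tax, prices))                    # transform every element
expensive = list(filter(is_expensive, with_tax))         # keep matching elements
total = reduce(lambda acc, p: acc + p, expensive, 0.0)   # fold into a single value

print(with_tax, expensive, total)
```

Each step returns a new list instead of mutating `prices`, so any stage can be tested on its own.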

Scalability and Reusability

Functional programming emphasizes immutability and composability, so a function can often be reused in another project with little or no change. This modular approach improves scalability, testability, and long-term maintainability, which is especially important when building data-driven applications that evolve over time and require flexible Python web solutions to adapt to growing system demands.
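Composability can be sketched with a small helper that chains functions left to right. `compose` here is a hypothetical helper written for illustration (Python's standard library does not ship one); it relies only on functools.reduce, and the label data is invented.

```python
from functools import reduce
from typing import Callable

def compose(*funcs: Callable) -> Callable:
    """Hypothetical helper: build a function that applies funcs left to right."""
    def composed(value):
        return reduce(lambda acc, func: func(acc), funcs, value)
    return composed

# Small, pure building blocks...
def drop_hyphens(text: str) -> str:
    return text.replace("-", " ")

# ...combined into larger ones without modifying any of them
tidy = compose(str.strip, str.lower)
normalize_label = compose(tidy, drop_hyphens)

labels = ["  Data-Science ", "MACHINE-learning", " Python"]
normalized = [normalize_label(label) for label in labels]
print(normalized)
```

Because `tidy` is itself an ordinary function, it can be reused elsewhere or composed further without changes.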

2. Writing Smarter, Not Longer

More lines of code do not make a better solution. Writing smarter code means favoring clarity, efficiency, and intent over complexity. That might mean using a dictionary lookup instead of a chain of if statements, or a list comprehension instead of several loops; the goal is to express the logic in as few lines as possible while preserving readability.
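The two substitutions described above might look like this in practice; the grade codes and the pass threshold of 50 are made-up examples.

```python
# Instead of a chain of if/elif branches...
def grade_verbose(code: str) -> str:
    if code == "A":
        return "excellent"
    elif code == "B":
        return "good"
    elif code == "C":
        return "average"
    else:
        return "unknown"

# ...a dictionary lookup expresses the same mapping as data
GRADES = {"A": "excellent", "B": "good", "C": "average"}

def grade(code: str) -> str:
    return GRADES.get(code, "unknown")  # .get supplies the fallback branch

# A list comprehension replaces an explicit loop-and-append
scores = [72, 95, 48, 88]
passed = [s for s in scores if s >= 50]
print(grade("B"), passed)
```

Adding a new grade now means adding one dictionary entry rather than another branch.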

The Power of Pythonic Practices

Python's philosophy encourages this approach through its emphasis on readability and simplicity. Idiomatic ("Pythonic") code embraces built-ins, expressive syntax, and elegant patterns such as unpacking, lambda functions, and comprehensions. These patterns minimize boilerplate and promote maintainability.
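A short, illustrative sketch of several of these patterns working together on a made-up list of (name, score) records:

```python
records = [("alice", 91), ("bob", 78), ("carol", 85)]  # example data

# Built-in sorted() with a lambda key: rank by score, highest first
ranked = sorted(records, key=lambda record: record[1], reverse=True)

# Extended unpacking: separate the winner from everyone else in one line
(top_name, top_score), *others = ranked

# zip() pairs parallel sequences; a dict comprehension builds a lookup table
names = [name for name, _ in records]
scores = [score for _, score in records]
score_by_name = {name: score for name, score in zip(names, scores)}

# enumerate() yields positions without a manual counter variable
for rank, (name, score) in enumerate(ranked, start=1):
    print(f"{rank}. {name}: {score}")
```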

Maintainability and Collaboration

Smarter code is easier for people to work with, not just for machines to run. When code is clear and compact, the whole team understands the logic faster, finds bugs sooner, and onboards new members with less friction. Intentional, well-structured code fosters collaboration and sustainability in long-term projects.

3. Lazy Evaluation: Process Big Data without Killing Your RAM

Lazy evaluation is a data processing strategy in which computations are deferred until their results are actually needed. Instead of reading a large volume of data into memory and processing it all at once, lazy evaluation processes data piece by piece, or loads only the portion that is needed, rather than holding the entire dataset in RAM.

Saving Memory with On-Demand Processing

Lazy evaluation matters most when working with big data. Loading an entire dataset into RAM at once can crash the system or severely degrade performance. Lazy evaluation avoids this by processing data in small chunks, which is all most applications need at any given moment. For example, a generator that reads a file line by line produces each line only when the consumer asks for it, so memory usage stays small regardless of file size.
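A minimal sketch of the line-by-line generator described above. The log format and the names read_records and count_errors are illustrative assumptions; the demo writes a tiny temporary file so the snippet runs on its own.

```python
import os
import tempfile
from typing import Iterator

def read_records(path: str) -> Iterator[str]:
    """Yield one line at a time; the file is never loaded into memory as a whole."""
    with open(path, encoding="utf-8") as handle:
        for line in handle:  # file objects are themselves lazy line iterators
            yield line.rstrip("\n")

def count_errors(path: str) -> int:
    # sum() pulls lines from the generator one at a time
    return sum(1 for line in read_records(path) if "ERROR" in line)

# Demo: write a tiny log file, then scan it lazily
with tempfile.NamedTemporaryFile(
    "w", suffix=".log", delete=False, encoding="utf-8"
) as tmp:
    tmp.write("INFO start\nERROR disk full\nINFO retry\nERROR timeout\n")
    log_path = tmp.name

lines = read_records(log_path)  # nothing has been read yet
first_line = next(lines)        # reading starts only now
lines.close()                   # stop early; the generator's with block closes the file

error_count = count_errors(log_path)
os.remove(log_path)
print(first_line, error_count)
```

The same code works unchanged on a multi-gigabyte log, because only one line is in memory at a time.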

Scalability and Performance Gains

Beyond saving RAM, lazy evaluation typically improves overall scalability. You can work with very large files or with data streamed from an API and start processing results incrementally, without waiting for the entire dataset to arrive. This makes lazy evaluation an advantage for real-time analytics, log processing, and data pipelines where responsiveness is a requirement.
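To illustrate incremental processing, the sketch below chains generators over an unbounded source and takes only the first few results with itertools.islice. `sensor_stream` is a hypothetical stand-in for an API or socket feed; the threshold of 90 is arbitrary.

```python
import itertools
import random
from typing import Iterable, Iterator

def sensor_stream(seed: int = 0) -> Iterator[float]:
    """Hypothetical unbounded data source (stands in for an API or socket)."""
    rng = random.Random(seed)
    while True:
        yield rng.uniform(0, 100)

def above(threshold: float, values: Iterable[float]) -> Iterator[float]:
    # Lazy filter: inspects one value at a time
    return (v for v in values if v > threshold)

def running_mean(values: Iterable[float]) -> Iterator[float]:
    # Emits an updated average after every new value
    total = 0.0
    for count, value in enumerate(values, start=1):
        total += value
        yield total / count

# No stage runs until islice asks for results, so the infinite source is safe
pipeline = running_mean(above(90, sensor_stream()))
first_five = list(itertools.islice(pipeline, 5))
print(first_five)
```

Results become available as soon as enough data has arrived, rather than after the (never-ending) stream finishes.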

4. Creating Reusable Logic: The Art of Wrapping and Layering

In software development, repeating the same logic in several places is not just tedious but also error-prone. Reusable logic is written once and used in multiple places without copying and pasting, which means less code and more consistency. This is especially relevant in data science, where the same transformations and checks are often applied at different points in a pipeline.

Wrapping with Functions and Decorators

One of Python's great features is that you can “wrap” logic inside functions and decorators. Wrapping isolates a behaviour so you can test, reuse, and maintain it independently of where it is used. Decorators add functionality (e.g., logging, validation, caching) as a separate layer while leaving the original function's logic unchanged.
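A minimal sketch of decorators adding timing and validation as separate layers, plus the standard library's functools.lru_cache for caching. The decorator names `timed` and `validate_non_empty` are hypothetical examples.

```python
import functools
import time

def timed(func):
    """Decorator: report how long each call takes, leaving func's logic untouched."""
    @functools.wraps(func)  # preserve the wrapped function's name and docstring
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            print(f"{func.__name__} took {time.perf_counter() - start:.6f}s")
    return wrapper

def validate_non_empty(func):
    """Decorator: reject empty input before the wrapped function runs."""
    @functools.wraps(func)
    def wrapper(data, *args, **kwargs):
        if not data:
            raise ValueError(f"{func.__name__} received empty input")
        return func(data, *args, **kwargs)
    return wrapper

@timed
@validate_non_empty
def mean(values):
    return sum(values) / len(values)  # core logic stays free of logging/validation

@functools.lru_cache(maxsize=None)  # standard-library caching decorator
def fib(n: int) -> int:
    return n if n < 2 else fib(n - 1) + fib(n - 2)

print(mean([1, 2, 3]), fib(30))
```

Stacking order matters: here validation runs inside the timing layer, so rejected calls are still timed.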

Layering for Cleaner Code Architecture

Layering applies the same idea at the level of code architecture: a program is built from modular layers, each with a single responsibility. Imagine a data pipeline broken into small functional layers: one for cleaning, one for transforming, and one for loading. Each layer can evolve independently, resulting in a codebase that is organized, testable, and easier to maintain.
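The clean/transform/load layering can be sketched as three small functions; the record fields (`city`, `sales`) and the sample rows are invented for illustration. The loading layer accepts any `sink` callable, so a database writer or file writer could be swapped in.

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, object]

def clean(rows: Iterable[Record]) -> List[Record]:
    """Cleaning layer: drop incomplete rows and normalize city names."""
    return [
        {**row, "city": str(row["city"]).strip().title()}
        for row in rows
        if row.get("city") and row.get("sales") is not None
    ]

def transform(rows: List[Record]) -> Dict[str, float]:
    """Transformation layer: total sales per city."""
    totals: Dict[str, float] = {}
    for row in rows:
        city = str(row["city"])
        totals[city] = totals.get(city, 0.0) + float(row["sales"])
    return totals

def load(totals: Dict[str, float], sink: Callable[[str, float], None]) -> None:
    """Loading layer: hand each result to any destination."""
    for city, total in sorted(totals.items()):
        sink(city, total)

def run_pipeline(rows: Iterable[Record], sink: Callable[[str, float], None]) -> None:
    # Each layer only depends on the output of the one before it
    load(transform(clean(rows)), sink)

raw_rows = [
    {"city": " paris ", "sales": 10.0},
    {"city": "PARIS", "sales": 5.0},
    {"city": "", "sales": 3.0},       # dropped: missing city
    {"city": "lyon", "sales": None},  # dropped: missing sales
    {"city": "lyon", "sales": 2.5},
]

results = []
run_pipeline(raw_rows, lambda city, total: results.append((city, total)))
print(results)
```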

5. Managing Context and Side Effects

Most programming languages allow side effects. A side effect happens when a function modifies something outside its local scope, for example by changing a global variable, writing data to a file, saving data to a database, or sending an email. Side effects are often necessary in practical code, but uncontrolled side effects are dangerous and can lead to bugs, unexpected behaviour, and code that is hard to debug or test.

The Role of Context Management

Python provides built-in tools, such as context managers, that help you handle side effects safely and efficiently. The with statement manages a resource used for a side-effecting action, such as a file handle, a database connection, or a lock shared between threads. Its advantage is that it handles resources cleanly and predictably: they are released when the block finishes, even if an error occurs during execution.
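As an illustration, the sketch below uses contextlib.contextmanager to build a hypothetical `transaction()` helper around an in-memory sqlite3 database: it commits if the block succeeds, rolls back if it raises, and always closes the cursor. The table and rows are invented for the example.

```python
import sqlite3
from contextlib import contextmanager

@contextmanager
def transaction(conn: sqlite3.Connection):
    """Hypothetical helper: commit on success, roll back on error, always close the cursor."""
    cursor = conn.cursor()
    try:
        yield cursor          # the body of the with block runs here
        conn.commit()
    except Exception:
        conn.rollback()       # undo partial writes
        raise                 # let the caller see the original error
    finally:
        cursor.close()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE scores (name TEXT, value REAL)")

with transaction(conn) as cur:
    cur.execute("INSERT INTO scores VALUES (?, ?)", ("alice", 0.91))

try:
    with transaction(conn) as cur:
        cur.execute("INSERT INTO scores VALUES (?, ?)", ("bob", 0.78))
        raise RuntimeError("simulated failure mid-transaction")
except RuntimeError:
    pass  # bob's insert was rolled back

row_count = conn.execute("SELECT COUNT(*) FROM scores").fetchone()[0]
conn.close()
print(row_count)
```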

Writing Predictable, Testable Code

Reducing side effects makes your code more predictable. A pure function's output depends only on its inputs, and it does not modify anything external. Pure functions are much easier to test and reason about. Practical ways to get there include isolating side effects in context managers, passing dependencies in as arguments (dependency injection), and keeping functions small and focused.
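A small sketch of the difference, using dates: the first function silently depends on the system clock, while the others make that dependency explicit. The function names are illustrative.

```python
from datetime import date
from typing import Callable

# Impure: depends on hidden external state (today's date), so results change daily
def days_until_deadline_impure(deadline: date) -> int:
    return (deadline - date.today()).days

# Pure: the same inputs always give the same output
def days_between(start: date, end: date) -> int:
    return (end - start).days

# Dependency injection: the clock is a parameter, so tests can supply a fixed one
def days_until_deadline(deadline: date, today: Callable[[], date] = date.today) -> int:
    return days_between(today(), deadline)

fixed_clock = lambda: date(2024, 1, 1)
print(days_until_deadline(date(2024, 1, 11), today=fixed_clock))
```

In production the default `date.today` is used; in tests a fixed clock makes the result deterministic.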

6. Memory Efficiency: Doing More with Less

When applications scale, memory use ultimately dictates performance, especially as data sizes grow. Poor memory management can make programs run slowly, crash, or require more expensive hardware, and the consequences are real, costly, and frustrating. In data science and backend systems in particular (but not only there), efficient memory use helps your program keep running correctly even with constrained resources.

Techniques for Efficient Code

Python gives developers many ways to use memory more efficiently. First, generators and iterators let you process data on the fly without loading whole datasets into memory. Second, data types matter: a tuple, for example, carries less overhead than a list holding the same items. Third, libraries such as NumPy provide arrays that use less memory than native Python lists of numbers and perform much better for numerical operations.
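A quick way to see the container overhead is sys.getsizeof. Note that it reports only the container itself, not the integer objects the list or tuple points to, so this is a rough comparison rather than a full memory audit.

```python
import sys

n = 1_000_000

as_list = list(range(n))              # every element materialized
as_tuple = tuple(range(n))            # same items, immutable, less overhead
as_generator = (i for i in range(n))  # values produced on demand

# sys.getsizeof measures the container, not the int objects it references
list_bytes = sys.getsizeof(as_list)
tuple_bytes = sys.getsizeof(as_tuple)
generator_bytes = sys.getsizeof(as_generator)
print(f"list: {list_bytes:,}  tuple: {tuple_bytes:,}  generator: {generator_bytes:,}")

# The generator can still feed an aggregate without building a collection
total = sum(as_generator)
```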

Avoiding Redundancy and Waste

Memory efficiency also comes from good coding practices. You can reduce memory use by avoiding unnecessary copies of large datasets, reusing variables that are no longer needed, and releasing references with the del statement so the garbage collector can reclaim them. To guide optimization, use profiling tools to measure memory usage and find hotspots. The memory_profiler package for Python is one such tool: it shows, line by line, where memory is being used, so you can see which parts of your code to optimize.
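memory_profiler is a third-party install; as a dependency-free alternative, the standard library's tracemalloc module can measure peak allocations and group memory by source line. The sketch below compares a list-based and a generator-based computation; the function names are illustrative.

```python
import tracemalloc

def sum_squares_list(n: int) -> int:
    squares = [i * i for i in range(n)]  # the whole list is held in memory
    return sum(squares)

def sum_squares_lazy(n: int) -> int:
    return sum(i * i for i in range(n))  # one value at a time

def peak_bytes(func, *args) -> int:
    """Return the peak memory traced by tracemalloc while func runs."""
    tracemalloc.start()
    try:
        func(*args)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return peak

list_peak = peak_bytes(sum_squares_list, 200_000)
lazy_peak = peak_bytes(sum_squares_lazy, 200_000)
print(f"list: {list_peak:,} bytes, generator: {lazy_peak:,} bytes")

# Finding hotspots: group live allocations by source line
tracemalloc.start()
labels = [str(i) * 10 for i in range(50_000)]
snapshot = tracemalloc.take_snapshot()
tracemalloc.stop()
for stat in snapshot.statistics("lineno")[:3]:
    print(stat)  # file:line, total size, and allocation count
```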

7. Working with Text Data: Go Beyond Basics

Text data is everywhere: emails, social media, reviews, documents, and logs. For data scientists, working with text data is part of the job, especially in areas like sentiment analysis, natural language processing (NLP), and customer feedback analysis. To get real insight from text, you need more than basic string operations.

Advanced Text Processing Techniques

Basic string methods like split(), replace(), and find() are useful, but more complicated work calls for more powerful tools. The re module provides regular expressions, which let you extract patterns, validate formats, and clean messy text. Beyond that, natural language processing (NLP) steps such as tokenization, stemming, lemmatization, and named entity recognition (NER) help turn raw text into analyzable units.
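A minimal sketch of extracting, cleaning, and validating text with the re module. The sample string is invented, and the email pattern is deliberately simplified (it is not a full RFC-compliant validator); the order-ID format is a hypothetical example.

```python
import re

raw = "Contact: Jane.Doe@example.com  on 2024-03-15!!  Order #A-1042   shipped."

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")        # simplified email pattern
ISO_DATE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")  # YYYY-MM-DD with groups

emails = EMAIL.findall(raw)   # list of full matches
dates = ISO_DATE.findall(raw) # list of (year, month, day) tuples

# Cleaning: strip unwanted punctuation, then collapse runs of whitespace
cleaned = re.sub(r"\s+", " ", re.sub(r"[^\w\s@.#-]", "", raw)).strip()

# Format validation: the whole string must match the hypothetical order-ID format
def is_valid_order_id(text: str) -> bool:
    return re.fullmatch(r"#?[A-Z]-\d{4}", text) is not None

print(emails, dates, cleaned, sep="\n")
```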

Leveraging NLP Libraries

Python has several good libraries for analyzing and manipulating text, such as NLTK, spaCy, and TextBlob. Depending on the library, features may include part-of-speech tagging, sentiment scoring, language detection, or summarization. By making full use of these NLP tools on cleaned text, you can turn unstructured raw text into clean, structured data that is ready for machine learning.

8. Thinking in Vectors and Matrices

Data science and machine learning depend on processing data quickly, not just on writing less code. Vectorized thinking means shifting from explicit loops to array and matrix operations that act on an entire object at once. This shift is essential when using libraries such as NumPy, pandas, and TensorFlow, which are designed to optimize these computations transparently for their users.

From Loops to Vector Operations

Looping over your data element by element may feel easier to understand than vectorized operations, but it becomes much slower as the data grows. Vectorized operations can be orders of magnitude faster because they are typically implemented in low-level C, whereas a for loop runs in the Python interpreter one iteration at a time. For example, adding two lists element by element with a Python loop is significantly slower than adding two NumPy arrays in a single element-wise operation. The same holds for filtering, aggregation, and mathematical transformations.
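The comparison described above can be sketched as follows, assuming NumPy is installed. Exact timings depend on the machine, so the snippet prints them rather than asserting a particular speedup; the array size and seed are arbitrary.

```python
import time
import numpy as np

rng = np.random.default_rng(seed=0)
a = rng.random(1_000_000)
b = rng.random(1_000_000)
a_list, b_list = a.tolist(), b.tolist()  # plain Python lists for the loop version

def add_loop(x: list, y: list) -> list:
    """Element-wise addition with an interpreted Python loop."""
    out = [0.0] * len(x)
    for i in range(len(x)):
        out[i] = x[i] + y[i]
    return out

start = time.perf_counter()
loop_sum = add_loop(a_list, b_list)
loop_seconds = time.perf_counter() - start

start = time.perf_counter()
vec_sum = a + b  # one element-wise call; the loop runs in compiled code
vec_seconds = time.perf_counter() - start

print(f"loop: {loop_seconds:.3f}s, vectorized: {vec_seconds:.4f}s")

# Filtering and aggregation follow the same pattern
large_values = a[a > 0.9]         # boolean mask instead of an if inside a loop
mean_of_roots = np.sqrt(a).mean() # transform and aggregate in one expression
```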

Foundation for Machine Learning and Beyond

Many machine learning algorithms (e.g., linear regression, neural networks, PCA) are fundamentally mathematical and rely on matrix operations at their core. Becoming proficient with vectorization therefore has the added benefit of deepening your understanding of how models learn and make predictions.
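Linear regression is a good example: fitting it amounts to solving the least-squares problem X w ≈ y, whose textbook solution is the normal equation w = (X^T X)^-1 X^T y. The sketch below, assuming NumPy, uses np.linalg.lstsq, which solves the same problem in a more numerically stable way. The data is synthetic and noise-free so the recovered weights can be checked exactly.

```python
import numpy as np

rng = np.random.default_rng(seed=42)
n_samples = 200
X = rng.normal(size=(n_samples, 2))  # two synthetic features
true_w = np.array([3.0, -1.5])
true_b = 0.5
y = X @ true_w + true_b              # noise-free targets for a clean check

# Append a column of ones so the intercept is learned as an extra weight
X_design = np.column_stack([X, np.ones(n_samples)])

# Least-squares solution of X_design @ w ≈ y, using matrix operations only
w, *_ = np.linalg.lstsq(X_design, y, rcond=None)
predictions = X_design @ w           # all predictions in one matrix product
print(w)
```

No loop over samples appears anywhere: fitting and prediction are both expressed as matrix operations.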

9. Asynchronous Execution: Handle Multiple Tasks at Once

With asynchronous execution, a program can start several tasks and make progress on others while waiting for each one to finish. Instead of blocking the entire program while a task waits, for example while reading a file, calling an API, or querying a database, asynchronous programming lets other tasks run in the meantime. Because waiting tasks do not hold up the rest of the program, overall throughput can improve without adding much load to the system.

The Power of async and await in Python

Python has async and await built into the language, and the asyncio library provides the event loop and tools that make asynchronous code easy to write and maintain. The async and await syntax lets programmers write non-blocking code that reads much like ordinary synchronous code, keeping it visually clean and maintainable.
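A minimal sketch of async and await with asyncio.gather. Here `fetch` is a hypothetical stand-in for an API call or database query, and asyncio.sleep simulates the time spent waiting on I/O.

```python
import asyncio
import time
from typing import List

async def fetch(source: str, delay: float) -> str:
    """Hypothetical stand-in for an API call or database query."""
    await asyncio.sleep(delay)  # simulated I/O wait; control returns to the event loop
    return f"{source}: done"

async def main() -> List[str]:
    # gather() runs the coroutines concurrently and returns results in argument order
    return await asyncio.gather(
        fetch("users-api", 0.5),
        fetch("orders-db", 0.5),
        fetch("inventory-api", 0.5),
    )

start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start
print(results)
print(f"elapsed: {elapsed:.2f}s")  # roughly one delay, not the sum of all three
```

Run sequentially, the three waits would add up; with gather they overlap.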

Ideal for I/O-Bound Workloads

Asynchronous programming works best for I/O-bound work, where the program waits on an external system (as opposed to CPU-bound work, where it waits on the processor). Whether making API calls, scraping websites, or reading files from disk, async execution helps an application stay responsive and fast even under heavy load.
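Ordinary file reads are blocking calls, so one common option (shown here as a sketch, not the only approach) is asyncio.to_thread, available in Python 3.9+, which runs each blocking read in a worker thread while the event loop stays free. The file names and contents are invented for the demo.

```python
import asyncio
import tempfile
from pathlib import Path
from typing import List

def read_text(path: Path) -> str:
    """Ordinary blocking file read."""
    return path.read_text(encoding="utf-8")

async def read_all(paths: List[Path]) -> List[str]:
    # Each blocking read runs in a worker thread; gather awaits them together
    return await asyncio.gather(*(asyncio.to_thread(read_text, p) for p in paths))

with tempfile.TemporaryDirectory() as tmp_dir:
    paths = []
    for i in range(3):
        p = Path(tmp_dir) / f"part_{i}.txt"
        p.write_text(f"chunk {i}", encoding="utf-8")
        paths.append(p)
    contents = asyncio.run(read_all(paths))

print(contents)
```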

10. Testing and Reliability: The Hallmark of Production-Ready Code

Given the relentless pace of software and data science development, writing code that works is only the start; production code must also work reliably. Tests verify that your logic behaves as expected, handles edge cases, and is not broken by later changes. Testing builds confidence that small implementation bugs will not grow into catastrophic failures in real-time systems.

Types of Testing for Better Confidence

Python supports many forms of testing, from simple unit tests to comprehensive integration and regression tests. Unit tests validate individual functions and modules. Integration tests check that the components of your system work together properly. Regression tests confirm that new changes have not reintroduced old bugs or broken existing behaviour. Tools such as unittest, pytest, and doctest make tests easy to write and quick to run.
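A minimal sketch of pytest-style tests: plain functions whose names start with `test_` and that use bare assert statements. `normalize_scores` is a hypothetical function under test, and the constant-input case is framed as a regression test for an imagined earlier division-by-zero bug.

```python
# Code under test (hypothetical example)
def normalize_scores(scores):
    """Scale scores to the 0-1 range."""
    if not scores:
        raise ValueError("scores must not be empty")
    low, high = min(scores), max(scores)
    if high == low:
        return [0.0 for _ in scores]  # guard against division by zero
    return [(s - low) / (high - low) for s in scores]

# Unit test: typical input
def test_basic_scaling():
    assert normalize_scores([10, 20, 30]) == [0.0, 0.5, 1.0]

# Regression test: pins down the fix for constant input
def test_regression_constant_input():
    assert normalize_scores([5, 5, 5]) == [0.0, 0.0, 0.0]

# Edge case: invalid input must fail loudly
def test_empty_input_raises():
    try:
        normalize_scores([])
    except ValueError:
        return
    raise AssertionError("expected ValueError for empty input")
```

Saved in a file such as test_scores.py, these would be collected by running `pytest`; inside a pytest suite, the `pytest.raises` context manager is the more idiomatic way to write the last test.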

Automated Testing and CI/CD Pipelines

Automated testing is critical in today’s rapidly evolving software world, where teams use cloud technology to speed up collaborative workflows. Embedding tests in a CI/CD pipeline validates your code continuously before each deployment, reducing the likelihood of failures in production.

Final Thoughts

Learning Python is not a simple checkbox; it is a craft. And as with any craft, there is a noticeable difference between novice work and professional work. If you are evaluating a data science course, don't just ask whether it teaches Python. Ask how it teaches Python. Does it focus only on syntax and loops?

Or does it go deeper into design thinking, system architecture, and performance? Advanced Python is where a good data scientist becomes great, and where theory meets practical application.

Advanced Python is what separates writing code that runs from building systems that scale, adapt, and endure. In the end, the question is not whether you can learn Python; it's whether you can learn how Python thinks and use that understanding to build the right tools and solve the right problems faster and better.